Safe navigation

Interactive Learning on Safe Navigation in Cluster Dynamic Environments

This is a Georgia Tech course research project that investigates different safe reinforcement learning (RL) approaches for a robot navigating dynamic cluster environments. Project paper: Interactive Learning on Safe Navigation in Cluster Dynamic Environments.

Abstract

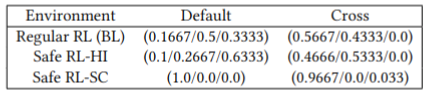

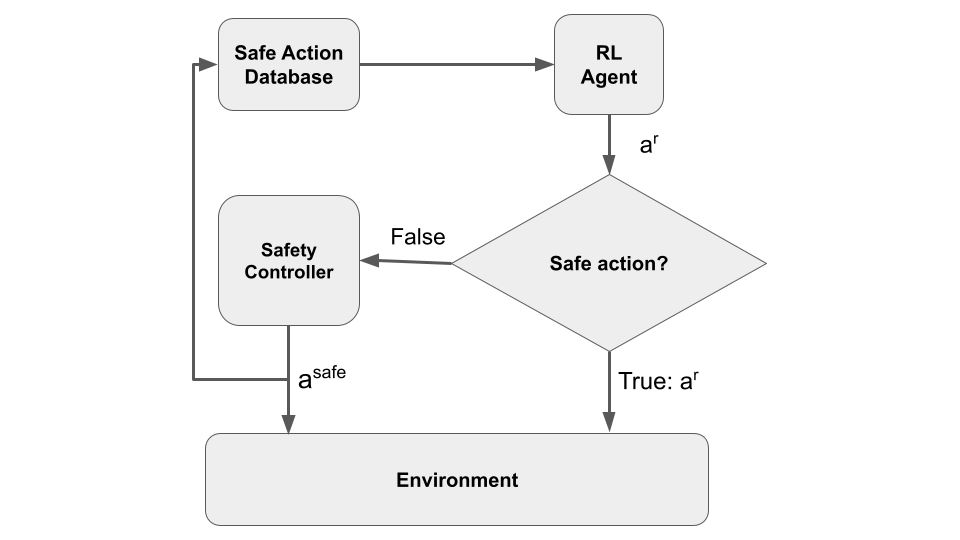

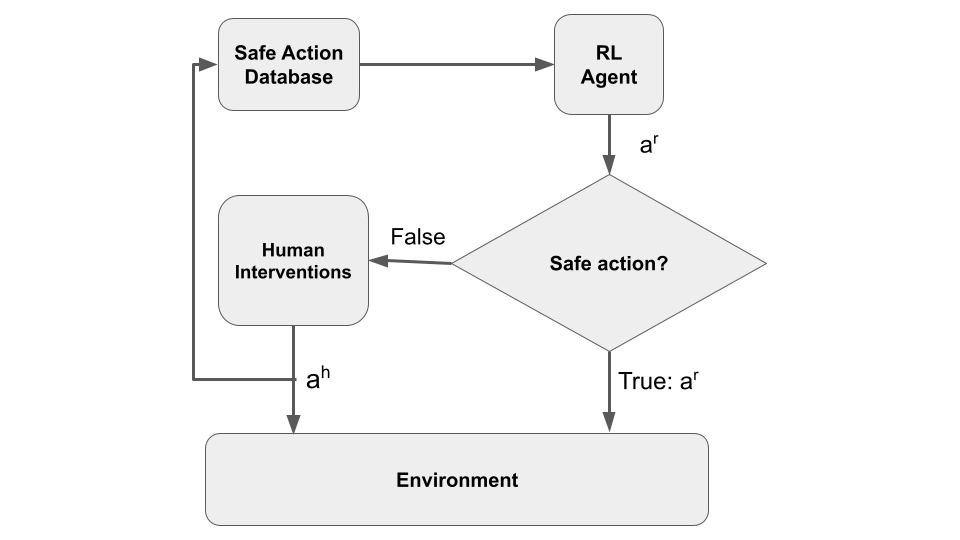

Safe navigation is essential for deploying mobile autonomous systems in real-world environments. In this paper, we investigate different safe reinforcement learning (RL) approaches for a robot to navigate safely in dynamic cluster environments. We developed two approaches on top of the same learning framework, in which a safe action replaces the RL agent's action whenever that action is unsafe: one uses an optimization-based safety controller to produce safe actions, and the other uses human interventions as safe actions. Our experimental results indicate that the optimization-based safety controller can safeguard the robot from collision, whereas the approach using human interventions performs very similarly to regular RL.
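The shared framework described in the abstract, where a safe action is substituted whenever the RL agent proposes an unsafe one, can be sketched roughly as follows. This is a minimal illustration and not the project's implementation: the names `is_unsafe`, `shielded_action`, and `stop_controller`, the 2D state and action layout, and the one-step distance check are all assumptions made for this example. The `safe_action_source` argument stands in for either the optimization-based safety controller or a human intervention.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical representations, assumed for illustration only.
State = Tuple[float, float]    # robot position (x, y)
Action = Tuple[float, float]   # velocity command (vx, vy)
SafeSource = Callable[[State, Action, Sequence[State]], Action]


def is_unsafe(state: State, action: Action, obstacles: Sequence[State],
              dt: float = 0.1, min_dist: float = 0.5) -> bool:
    """Assumed safety check: flag the action if the one-step predicted
    position comes closer than min_dist to any obstacle."""
    nx = state[0] + action[0] * dt
    ny = state[1] + action[1] * dt
    return any((nx - ox) ** 2 + (ny - oy) ** 2 < min_dist ** 2
               for ox, oy in obstacles)


def shielded_action(state: State, rl_action: Action,
                    obstacles: Sequence[State],
                    safe_action_source: SafeSource) -> Tuple[Action, bool]:
    """Return the action to execute and whether the RL action was overridden."""
    if is_unsafe(state, rl_action, obstacles):
        # The safe action could come from a safety controller or a human.
        return safe_action_source(state, rl_action, obstacles), True
    return rl_action, False


def stop_controller(state: State, rl_action: Action,
                    obstacles: Sequence[State]) -> Action:
    """Placeholder safe-action source: simply stop in place."""
    return (0.0, 0.0)


if __name__ == "__main__":
    # An obstacle directly ahead triggers the override.
    print(shielded_action((0.0, 0.0), (1.0, 0.0), [(0.3, 0.0)], stop_controller))
    # A distant obstacle leaves the RL action untouched.
    print(shielded_action((0.0, 0.0), (1.0, 0.0), [(5.0, 5.0)], stop_controller))
```

Passing the safe-action source as a callable keeps the override logic identical for both variants, so only the origin of the replacement action differs between them. The returned flag could be logged or used in the learning update, though how the project uses overrides during training is not specified here.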

Methodology

Results