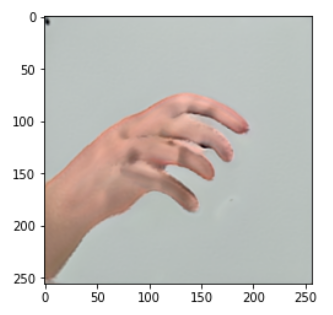

Hand Synthesis

This is an ongoing project advised by Professor Greg Turk. Popular existing generative models (e.g., StyleGAN, Stable Diffusion) perform poorly at synthesizing hands, whose geometry is complex. This research therefore aims to synthesize lifelike hands with generative models by leveraging additional information in the input data, including:

- keypoint positions

- skeletons

- dorsal/ventral

- left/right

- …
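To make "additional information in the input data" concrete, here is a minimal sketch of one way such conditioning could be encoded. It assumes a 21-keypoint hand layout (wrist plus four joints per finger, a common convention in hand-pose datasets) given in pixel coordinates. Keypoints become Gaussian heatmaps, the skeleton becomes a rasterized bone mask, and left/right and dorsal/ventral become one-hot labels. The function names and this heatmap-plus-one-hot encoding are hypothetical illustrations, not the method used in this project.

```python
import math

# ASSUMPTION: a 21-keypoint layout (0 = wrist, then 4 joints per finger,
# thumb 1-4, index 5-8, middle 9-12, ring 13-16, pinky 17-20).
HAND_BONES = [(0, 1), (1, 2), (2, 3), (3, 4),         # thumb
              (0, 5), (5, 6), (6, 7), (7, 8),         # index
              (0, 9), (9, 10), (10, 11), (11, 12),    # middle
              (0, 13), (13, 14), (14, 15), (15, 16),  # ring
              (0, 17), (17, 18), (18, 19), (19, 20)]  # pinky


def keypoint_heatmap(x, y, size, sigma=2.0):
    """Render one keypoint (pixel coords) as a size x size Gaussian heatmap."""
    return [[math.exp(-((c - x) ** 2 + (r - y) ** 2) / (2 * sigma ** 2))
             for c in range(size)] for r in range(size)]


def skeleton_mask(keypoints, size, steps=32):
    """Rasterize bones into a binary mask by sampling points along each segment."""
    mask = [[0.0] * size for _ in range(size)]
    for a, b in HAND_BONES:
        (xa, ya), (xb, yb) = keypoints[a], keypoints[b]
        for i in range(steps + 1):
            t = i / steps
            c = round(xa + t * (xb - xa))
            r = round(ya + t * (yb - ya))
            if 0 <= r < size and 0 <= c < size:
                mask[r][c] = 1.0
    return mask


def encode_hand_condition(keypoints, is_left, is_dorsal, size=64):
    """Hypothetical encoder returning (spatial_channels, label_vector).

    spatial_channels: 21 keypoint heatmaps + 1 skeleton mask, each size x size.
    label_vector: one-hot [left, right] followed by one-hot [dorsal, ventral].
    """
    if len(keypoints) != 21:
        raise ValueError("expected 21 (x, y) keypoints")
    channels = [keypoint_heatmap(x, y, size) for x, y in keypoints]
    channels.append(skeleton_mask(keypoints, size))
    labels = [1.0, 0.0] if is_left else [0.0, 1.0]
    labels += [1.0, 0.0] if is_dorsal else [0.0, 1.0]
    return channels, labels
```

In a conditional generator, the spatial channels could be concatenated with an image-shaped input and the label vector with the latent code or a class embedding. How this project actually feeds such information into StyleGAN is not described here.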

Here’s the preliminary research proposal (drafted in August 2022): proposal.

One hand synthesized with StyleGAN using our methods.