Cross-modal data augmentation

Discriminative Cross-Modal Data Augmentation for Medical Imaging Applications

This research project aims to mitigate the data deficiency issue in the medical imaging domain through cross-modality data augmentation. Paper (arXiv:2010.03468): Discriminative Cross-Modal Data Augmentation for Medical Imaging Applications.

Abstract

While deep learning methods have shown great success in medical image analysis, they require a number of medical images to train. Due to data privacy concerns and unavailability of medical annotators, it is oftentimes very difficult to obtain a lot of labeled medical images for model training. In this paper, we study cross-modality data augmentation to mitigate the data deficiency issue in the medical imaging domain. We propose a discriminative unpaired image-to-image translation model which translates images in source modality into images in target modality where the translation task is conducted jointly with the downstream prediction task and the translation is guided by the prediction. Experiments on two applications demonstrate the effectiveness of our method.

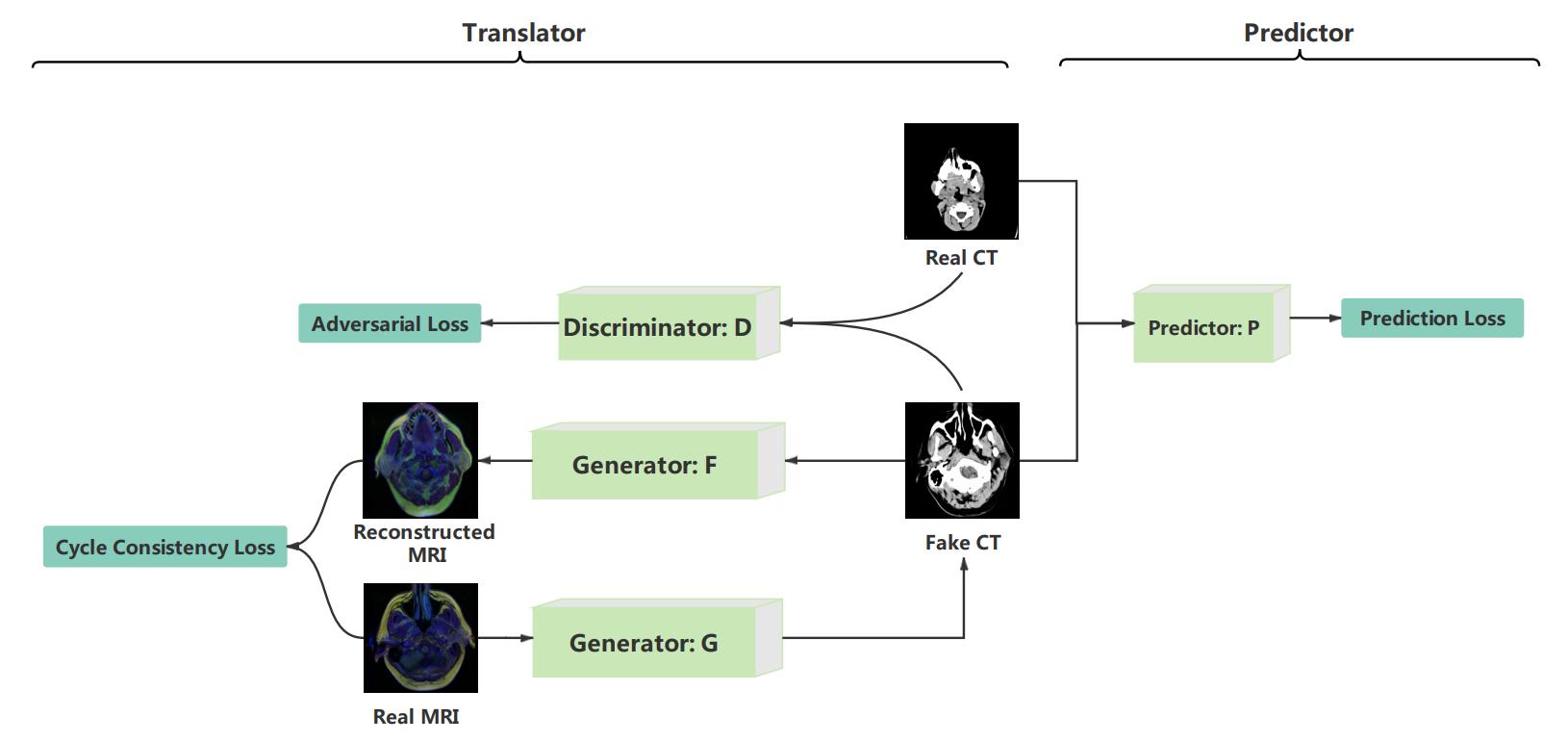

Methodology

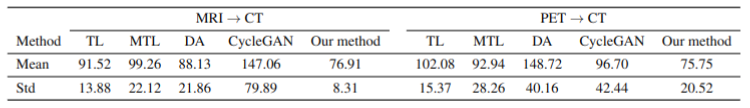

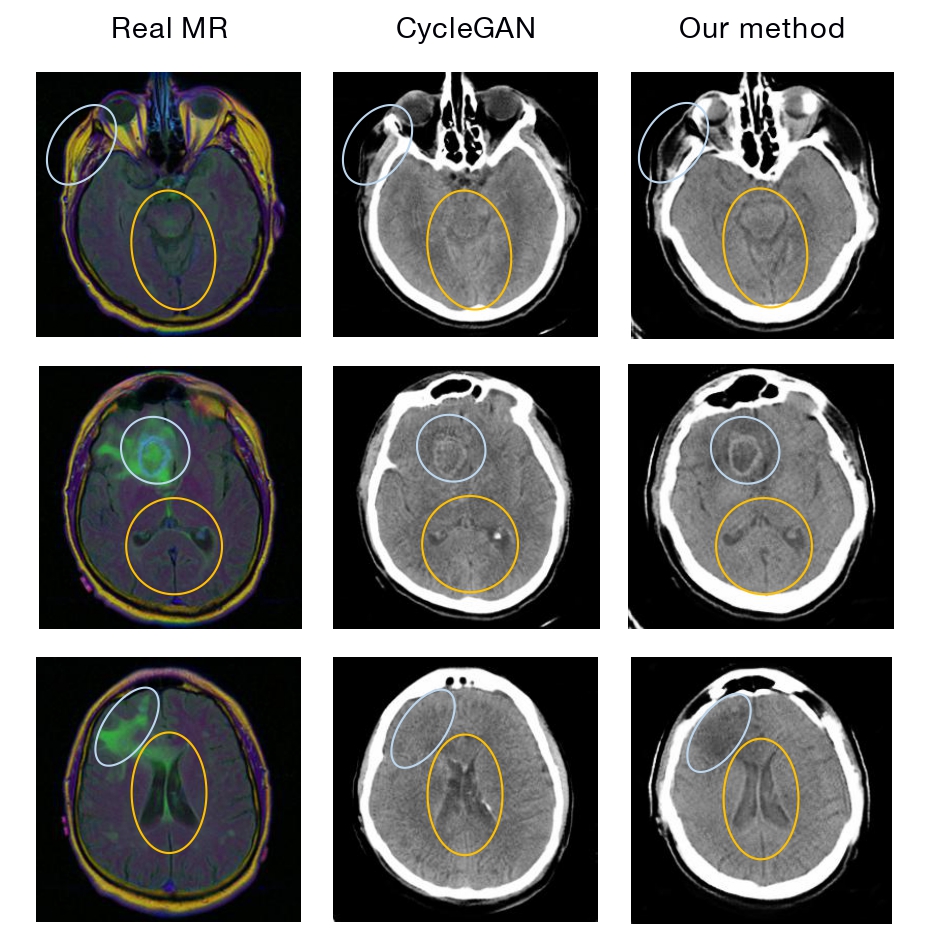

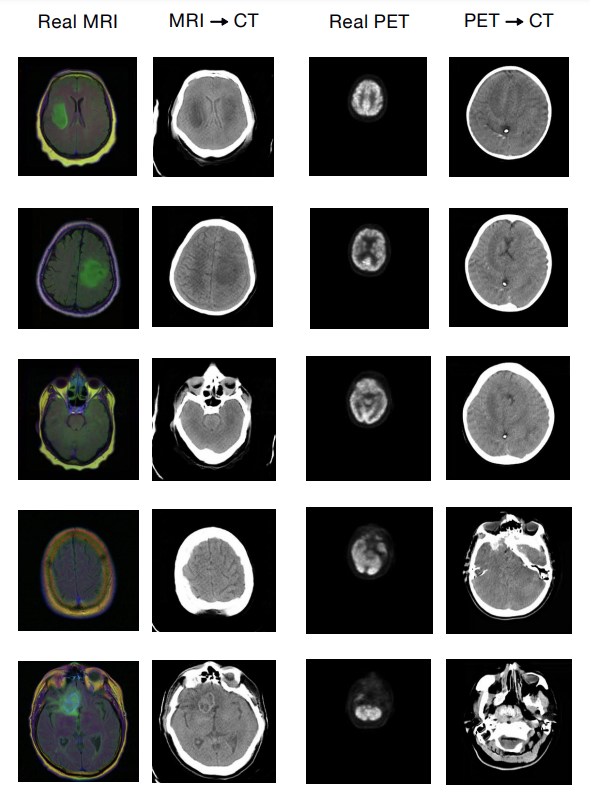
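The abstract describes translation that is trained jointly with the downstream prediction task and guided by it. The sketch below illustrates one way such a joint objective could be assembled. It is not the authors' implementation: the CycleGAN-style adversarial and cycle-consistency terms, the function names, and the weights `lambda_cyc` and `lambda_pred` are all assumptions made for illustration, and plain Python lists stand in for images and network outputs.

```python
import math


def l1_distance(a, b):
    """Mean absolute difference between two flattened images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cross_entropy(probs, label):
    """Negative log-likelihood of the true class."""
    return -math.log(max(probs[label], 1e-12))


def generator_objective(d_fake, source, reconstructed, pred_probs, label,
                        lambda_cyc=10.0, lambda_pred=1.0):
    """Combine translation and prediction terms into one scalar loss.

    d_fake        : discriminator's probability that the translated image is real
    source        : flattened source-modality image
    reconstructed : source -> target -> source round trip (assumed cycle term)
    pred_probs    : downstream classifier's class probabilities on the translated image
    label         : ground-truth class carried over from the source image
    lambda_cyc, lambda_pred : hypothetical weights, not taken from the paper
    """
    adversarial = -math.log(max(d_fake, 1e-12))    # translated image should look real
    cycle = l1_distance(source, reconstructed)     # translation should be invertible
    prediction = cross_entropy(pred_probs, label)  # translation should keep the label recoverable
    return adversarial + lambda_cyc * cycle + lambda_pred * prediction


# Toy usage: the loss drops when the classifier recovers the label
# from the translated image, which is how prediction "guides" translation.
loss_wrong = generator_objective(0.5, [0.0, 1.0], [0.0, 1.0], [0.9, 0.1], label=1)
loss_right = generator_objective(0.5, [0.0, 1.0], [0.0, 1.0], [0.1, 0.9], label=1)
print(loss_wrong > loss_right)
```

In a real training loop these terms would be computed on tensors and backpropagated through both the translator and the predictor; the scalar version here only shows how the prediction loss enters the translator's objective.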

Results

Citation

    @article{yang2020discriminative,
      title={Discriminative Cross-Modal Data Augmentation for Medical Imaging Applications},
      author={Yang, Yue and Xie, Pengtao},
      journal={arXiv preprint arXiv:2010.03468},
      year={2020}
    }